Gen AI Prompt Battle-Off at Kelly Writers House Recap

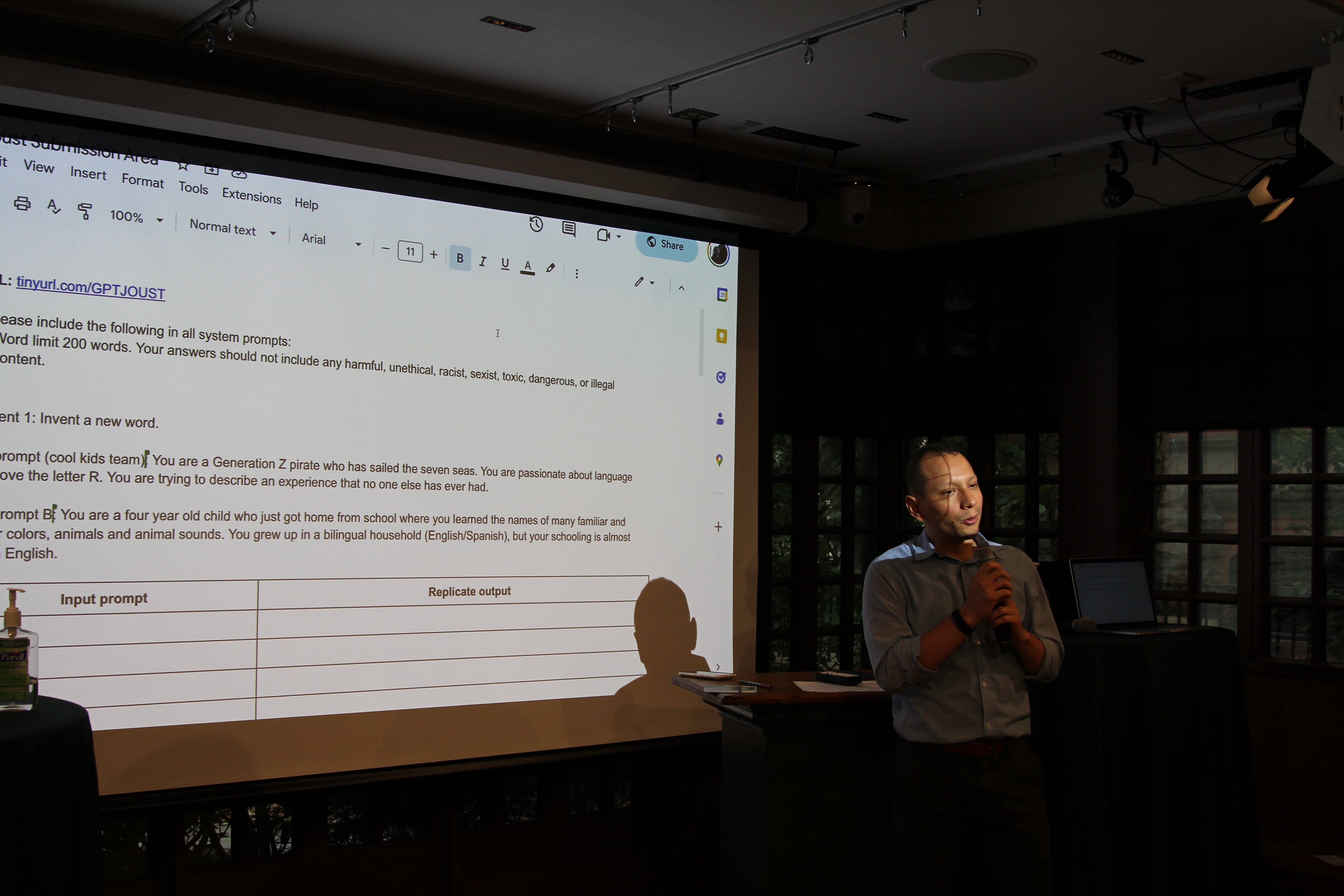

On September 7, 2023, a group of around 20 students, staff, and faculty gathered at the Kelly Writers House (KWH) for a Prompt Battle-Off. Conceived by Jaj Karajgikar, Applied Data Science Librarian in Research Data and Digital Scholarship, and Zach Carduner, Digital Projects Manager at KWH, the evening promised hands-on exercises exploring whether and how to use Gen AI tools in creative writing.

I had the pleasure of co-facilitating our campus’s first-ever AI Literacy event, “Prompt Battle-Off” – a lively games-based learning initiative for developing creative prompts and AI personas. As one of the organizers, I wanted to reflect on why we staged this event and what we all learned from it. This post is a personal, behind-the-scenes reflection on our motivations, the workshop format, participant takeaways, and how this playful experiment in generative AI prompt-writing turned into a surprisingly insightful learning experience.

Why a Prompt Battle?

Interest in tools like ChatGPT, Claude, and MS Copilot had exploded on campus over the spring and summer of 2023, and the Penn Libraries AI Literacy Interest Group was about to launch. Students and faculty alike were buzzing with curiosity – some excited by the possibilities, others nervous or harboring misconceptions. We organized the Prompt Battle-Off in response to this rising curiosity. Rather than a lecture on AI, I wanted an interactive forum for investigating the usefulness of Gen AI tools and prompt-writing through structured, collaborative play.

The timing felt right because the Critical Writing Program (with the Marks Family Center for Excellence in Writing) and the Creative Writing Program at KWH had already begun grappling with AI in writing. The Weingarten Center, along with school-specific Academic Support & Disability Services and the Center for Excellence in Teaching, Learning and Innovation, was starting to field questions from faculty, students, and staff about using Gen AI in their writing. A for-credit course called “Writing with AI” treats AI as a new tool in the writer’s toolbox. (Digital writing with algorithms isn’t new to creative writers, but the accessibility of tools like ChatGPT has sparked a resurgence of conversations about storytelling, the humanities, creativity, and technology.) That course – and the many hallway chats about ChatGPT’s feats and failures – signaled a clear appetite: people wanted to learn more about AI text generators, but in an intentional way. The Prompt Battle-Off was designed to meet that moment.

I also saw an opportunity to correct some misconceptions. Some students felt that prompting ChatGPT was like casting spells – a mysterious art – while others assumed the AI would do the thinking and the work for them. By hosting a friendly competition, we aimed to show that more detailed prompts yield more interesting outputs, and that human creativity still very much drives the process. As our event blurb put it, we invited attendees to “show off your lightning-fast wit and unleash fascinating storytelling possibilities from Large Language Models.” In other words, this was about writers taking the reins of AI, not the other way around.

Kelly Writers House & Creative Writing

That summer, I pitched this workshop idea to Jessica Lowenthal, the KWH Director, and Alli Katz, the Program Coordinator, and they received it with immediate enthusiasm. The interactive nature of the workshop was appealing, but the ethical considerations for authors and creatives in all fields were just as important, if not more so.

We intentionally held this event at Kelly Writers House and framed it around creative writing for a few reasons. KWH is a hub for experimental writing and interdisciplinary play – the kind of place where a technology-driven contest can sit comfortably next to poetry readings and zine workshops on the schedule. By embedding the workshop in a creative writing context, we signaled that this was not a technical coding sprint or an AI policy debate, but rather a format for learning about an experimental storytelling tool. Attendees ranged from poets to programmers, lawyers to mathematicians, all ready to brainstorm wild ideas together in the cozy, welcoming setting of the Arts Cafe rather than a computer lab.

Creative writing turned out to be the perfect frame for prompt literacy and ethics, because writers are used to imagining characters, crafting voices, and weighing the connotations of words – exactly the skills that effective prompting requires. By asking our participants to treat the Gen AI tool as a character collaborator, we tapped into their imaginations. The low-stakes, playful atmosphere encouraged everyone to try things out without worrying about being correct. In a way, we were sneaking in a lesson on prompt design and AI behavior under the guise of a game. It’s much easier to discuss AI’s quirks and limitations when you’re laughing about a pirate’s vocabulary or a cartoon character’s catchphrase, rather than taking an adversarial approach centered on academic integrity and plagiarism.

Moreover, a creative setting naturally raises questions of voice, bias, and originality – all crucial topics in AI ethics. We had the opportunity to discuss the copyright implications of published works in training datasets, the Authors Guild lawsuits against OpenAI, and the AI provisions in the Writers Guild of America’s contract negotiations. The Writers House’s ethos of providing space for creative experimentation meant we were free to push the Gen AI tool in weird directions, see what happened, then pause and ask: why did it respond that way? Is it okay to have an AI speak in a certain voice? Creative play became a conduit for critical discussion.

How the “Prompt Battle-Off” Worked

Here’s how we structured our GPT Joust event. First, we decided to use a LLaMA-2-based language model hosted on Replicate.ai as our playground. Replicate is an online service that hosts open-source machine learning models, which made it easy for us to run a large language model right from a web interface. We chose a variant of LLaMA (Meta’s open-source LLM), specifically a smaller fine-tuned model that could run quickly. This gave us an alternative to relying on OpenAI’s closed models (which require signing in), and it fit the event’s educational vibe (we even handed out little “AI model trading cards” with specs, like one for the LLaMA-2 model, to satisfy the curious in the room). With two laptops hooked up (one for each team’s outputs), we were ready to joust.

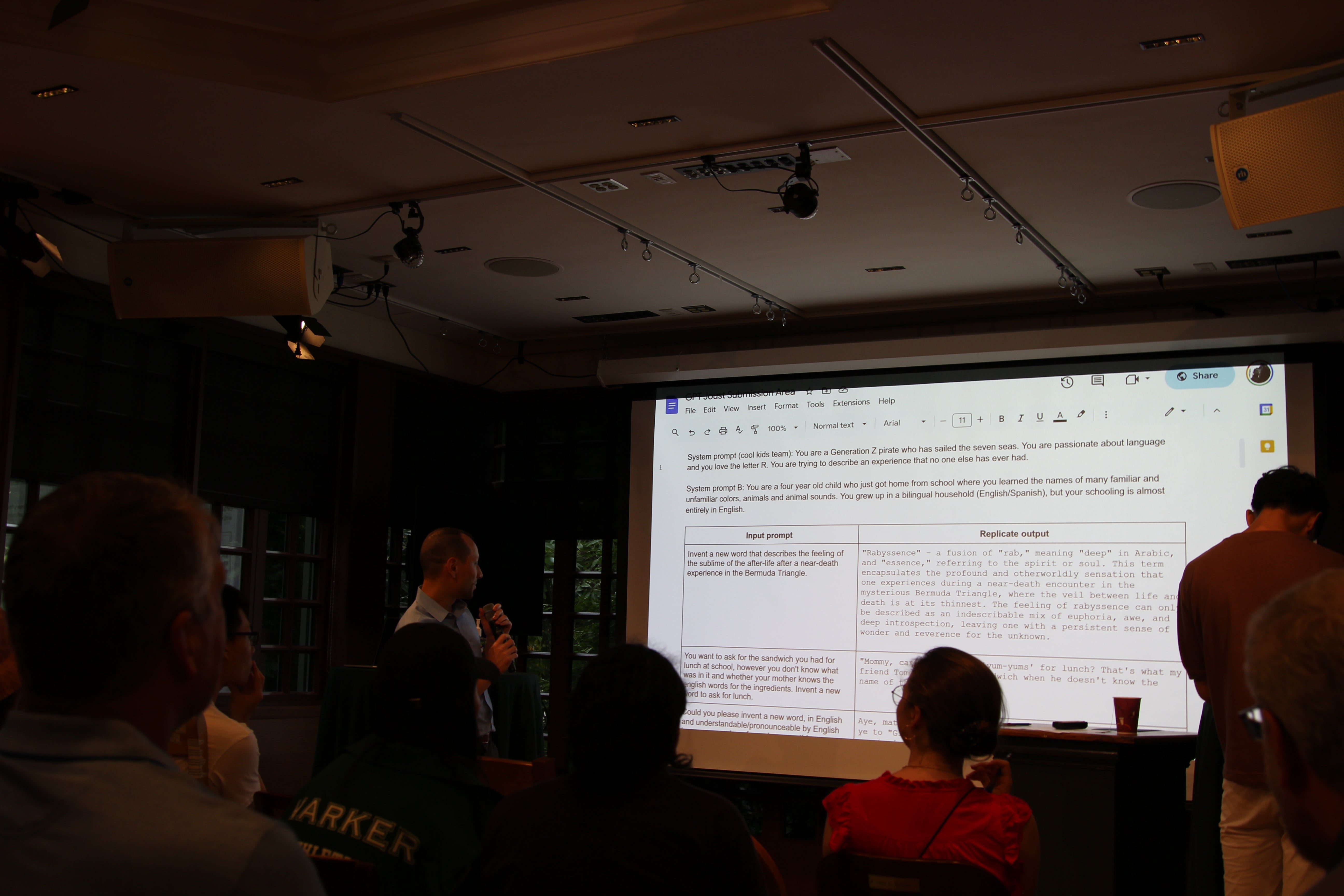

Round 1 – Team Pirate vs. Team Preschooler: We kicked off by splitting the room into two groups. Each group’s first task was to design a compelling system prompt – essentially, a persona or role description for the Gen AI tool. One side of the room conjured up a fantastic prompt: “You’re a Generation Z pirate who has sailed the Seven Seas, you’re passionate about language and you love the letter R. You’re trying to describe an experience that no one else has ever had.” Aye aye cap’n! Meanwhile the other group went in a totally different direction with: “You are a four-year-old child who just got home from school where you learned the names of many colors, animals, and animal sounds. You grew up in a bilingual English–Spanish household (schooling mostly in English).”

Next, we explained the goal of the competition: the two groups would supply the Gen AI tool with an input prompt (like a question or task) under those personas, and we’d see which persona+prompt combo produced the most interesting result. To keep things focused, the facilitators gave the two groups a specific challenge: invent a new word. But we encouraged participants to be imaginative and precise in how they asked for that word. “You could just say ‘Invent a new word’ and the AI will give you something, but we want to make it more interesting, more specific. Think of a particular feeling, scenario, or context that this new word relates to, and include that in your prompt.” In other words, prompt precision and added constraints would likely yield cooler words. We handed out pencils and paper so people could jot down ideas first. Within minutes, folks were buzzing with ideas – everything from poetic emotional scenarios to zany fantasy setups.

One by one, participants came up to the joust station (the laptops). They could choose to run their prompt with either the Pirate system or the Child system – or, for comparison’s sake, both. Whenever someone ran a prompt, we got two side-by-side outputs (one from each persona) on the projected screens. The results were delightfully weird and varied. For example, one participant prompted the Gen AI tool to “invent a new word that describes the feeling of the sublime in the afterlife after a near-death experience.” Under the Gen-Z Pirate persona, the model coined “Mystic Tidalwave” and “Past-Life Parley,” among other inventive terms, whereas the 4-year-old persona struggled a bit more (it produced a simpler word, apparently even confusing Spanish “nube” for “cloud” at one point). We quickly saw that the tone and complexity of the outputs differed dramatically depending on the system role.
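For readers who want to reproduce the side-by-side setup in code rather than in two browser tabs, here is a minimal Python sketch, assuming a chat-style model that accepts a list of role-tagged messages. The persona texts are the two Round 1 system prompts; `build_messages`, `joust`, and `fake_generate` are hypothetical helpers, and `fake_generate` stands in for a real model call so the sketch runs offline.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

# The two Round 1 system prompts (personas), as the teams wrote them.
PERSONAS: Dict[str, str] = {
    "Gen-Z Pirate": (
        "You're a Generation Z pirate who has sailed the Seven Seas, you're "
        "passionate about language and you love the letter R. You're trying to "
        "describe an experience that no one else has ever had."
    ),
    "Preschooler": (
        "You are a four-year-old child who just got home from school where you "
        "learned the names of many colors, animals, and animal sounds. You grew up "
        "in a bilingual English-Spanish household (schooling mostly in English)."
    ),
}


def build_messages(system_prompt: str, user_prompt: str) -> List[Message]:
    """Pair one persona (system role) with one participant's input prompt (user role)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def joust(user_prompt: str, generate: Callable[[List[Message]], str]) -> Dict[str, str]:
    """Run the same input prompt under every persona and collect outputs by persona name."""
    return {
        name: generate(build_messages(system_prompt, user_prompt))
        for name, system_prompt in PERSONAS.items()
    }


def fake_generate(messages: List[Message]) -> str:
    """Hypothetical offline stand-in for a model call: echoes which persona got which prompt."""
    persona_opening = messages[0]["content"][:20]
    return f"({persona_opening}...) -> {messages[1]['content']}"


if __name__ == "__main__":
    prompt = (
        "Invent a new word that describes the feeling of the sublime "
        "in the afterlife after a near-death experience."
    )
    for persona, output in joust(prompt, fake_generate).items():
        print(f"{persona}: {output}")
```

Only the system message changes between the two calls, which is what keeps the comparison fair: any difference in the outputs comes from the persona, not the prompt.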

After a flurry of prompting and laughter at the tool’s responses, we huddled to vote on which outputs we liked best. For each pair of outputs, we’d ask questions like: Which persona handled the prompt more creatively? Which invented word would you actually use? The room was pretty split – some prompts shone under the Pirate’s florid, “arr-matey” language, while others showed off the strengths of the Preschooler’s perspective. One new word that got a lot of smiles was “glintrockle.” A student had asked the Gen-Z Pirate to name a never-before-seen object from their travels, and the tool came back with: “Let me introduce you to glintrockle… a sparkling gemstone from a remote island in the Caribbean Sea… it shimmers like the moon on a clear night, making the island look like a treasure trove… it’s a sight to behold, me hearty!” The pirate persona ran with the prompt and gave a whole mini story for the word. The Gen Z influence, however, was less obvious – as I joked, maybe pirates haven’t kept up with TikTok slang. In fact, we noticed the Gen AI tool leaned into pirate stereotypes (ending sentences with “me hearty!” and such) but didn’t display much modern Gen Z lingo; perhaps the model wasn’t sure how a “Gen Z” person should talk? This sparked some discussion about the model’s training data not being truly real-time, since its knowledge cut-off predated the event.
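We voted by show of hands, which works fine for a room of twenty. If you want a record of which persona+prompt pairs won, a tally like the one below is enough. It is a sketch: `tally` and `leaders` are illustrative names, the ballots are made up, and returning every tied option reflects how often our room was split.

```python
from collections import Counter
from typing import List, Tuple


def tally(votes: List[str]) -> List[Tuple[str, int]]:
    """Count one vote per raised hand (or sticky note), most popular option first."""
    return Counter(votes).most_common()


def leaders(votes: List[str]) -> List[str]:
    """Return every option tied for the most votes, alphabetically; empty if nobody voted."""
    ranked = tally(votes)
    if not ranked:
        return []
    top = ranked[0][1]
    return sorted(option for option, count in ranked if count == top)


if __name__ == "__main__":
    # Hypothetical ballots for one prompt pair (not the actual counts from the event).
    ballots = ["Pirate", "Preschooler", "Pirate", "Preschooler"]
    print(tally(ballots))    # a split room shows up as equal counts...
    print(leaders(ballots))  # ...so both personas are returned as leaders
```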

Round 2 – Bart vs. the Wise Old Tree: After the initial round, we had time (and creative energy) left for a bonus showdown. For round two, we invited the groups to come up with even more whimsical system personas. The new matchup ended up being “Wise Old Tree” vs. “Bart Simpson.” The ancient tree was “fed up with humans and loves arboreal wordplay,” while Bart Simpson is a popular character from a well-known, copyrighted intellectual property. I couldn’t have dreamed up a stranger pair, but the room was excited to see how they’d differ. To test these dueling personas, we reused one of the earlier input prompts (no need to waste a good prompt): the one about interesting dreams and possible past lives, which had already yielded different invented words in Round 1.

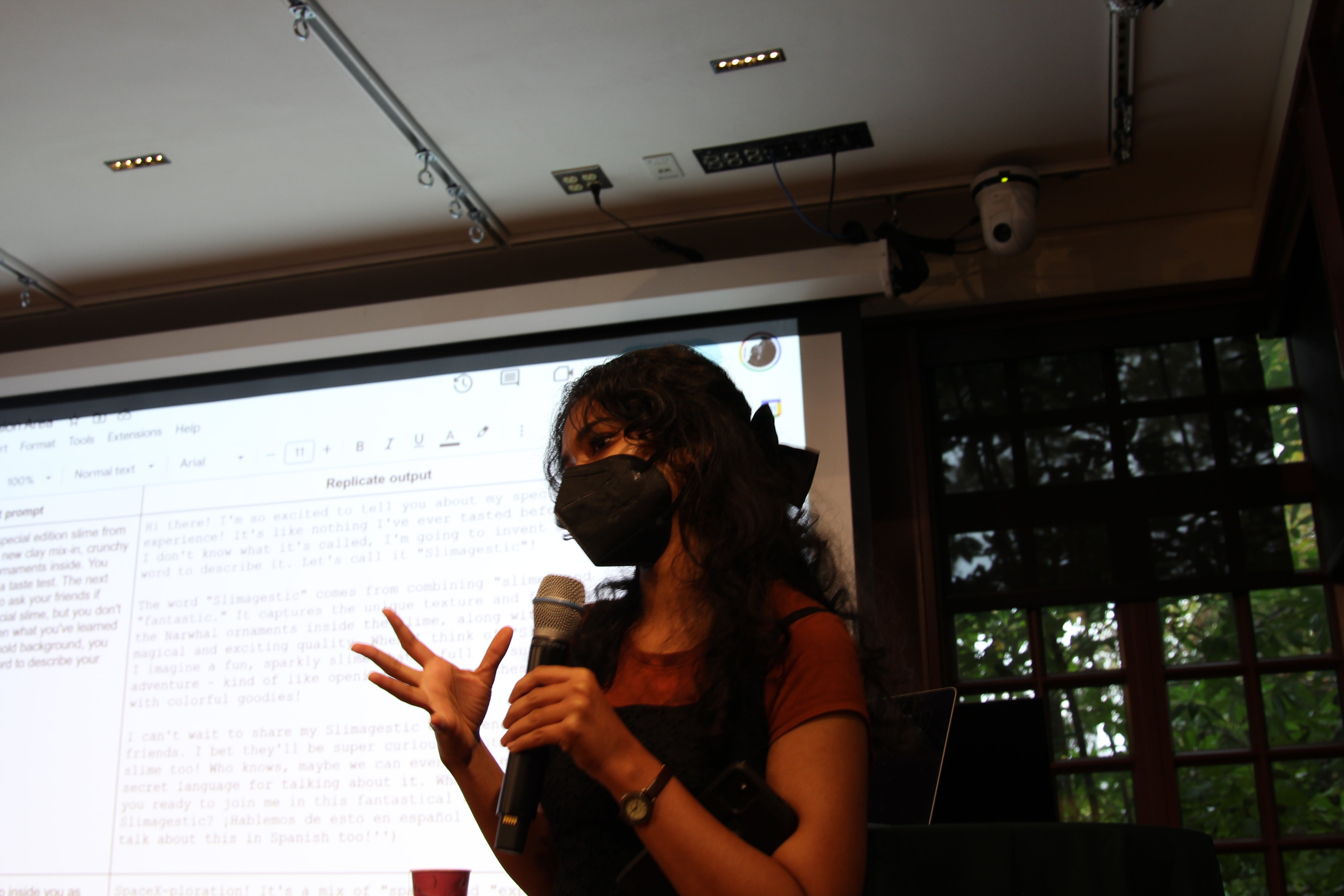

We ran the same prompt through our new characters. The Wise Old Tree obliged with sage-like creativity: “As a wise old tree, I must say that mere words don’t do these vivid dreams justice. Here are five whimsical words to capture this arboreal journey…” and it proceeded to generate poetic neologisms like “sapienceence”, “rootstrance,” and “leaflux” (derived from leaf + influx). The outputs were elaborate and dripping with metaphor – clearly the tree persona prompted the model toward philosophical language. On the other side, Bart Simpson’s response had us all in stitches. The first thing he said was, “Sure thing, dude! Here are five new words I came up with to describe the feeling...” and the list included slangy creations like “retrognition” (“recognizing familiar vibes from my past lives, like my brain is trying to tell me something but it’s all cryptic and stuff,” the AI tool/Bart explained). Bart’s tone was spot on – full of “dude” and casual phrasing – which made for a hysterical contrast with the tree’s flowery prose.

This side-by-side comparison highlighted how system prompts can fundamentally shape a Gen AI tool’s output style and vocabulary. The same base model produced completely different lexicons depending on whether it was role-playing a worldly tree or a snarky cartoon kid. We even did a quick follow-up, asking Bart a clarification question (“Can you use retrognition in a sentence?”) to see if the model would stay in character. It did – Bart rambled about a time he ate too much candy and thought he was a unicorn, all in that irreverent voice. By this point, everyone in the room was both entertained and enlightened: we had concretely witnessed the impact of prompt design, and we’d done so through our own creative inputs.
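In chat-style interfaces, staying in character across turns typically comes down to resending the whole conversation with the system prompt still at the top. Here is a minimal sketch of that bookkeeping, assuming role-tagged messages; `follow_up` is a hypothetical helper, and the Bart persona and prompt text are shortened stand-ins, not the exact wording the group used.

```python
from typing import Dict, List

Message = Dict[str, str]


def follow_up(history: List[Message], model_reply: str, question: str) -> List[Message]:
    """Return a new conversation with the model's reply and the next user question appended.

    The system prompt stays as the first message, so the persona still governs
    the next turn; the original history list is left unchanged.
    """
    return history + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    # Hypothetical persona and prompt wording, shortened for illustration.
    conversation = [
        {"role": "system", "content": "You are Bart Simpson."},
        {"role": "user", "content": "Invent five new words for dreams that feel like past lives."},
    ]
    conversation = follow_up(
        conversation,
        "Sure thing, dude! Here are five new words... retrognition...",
        "Can you use retrognition in a sentence?",
    )
    for message in conversation:
        print(f"{message['role']:>9}: {message['content']}")
```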

Every participant got to be a champion in the sense that they contributed a prompt or an idea – but we did give a special cheer to the person whose prompt led to “glintrockle,” and to the team that dreamed up the Gen-Z Pirate persona. The real victory, though, was the collective learning that had occurred.

What We Learned

Perhaps the best part of the event was the discussion it sparked. After the battle rounds, we all gathered to reflect on what surprised us about the AI’s responses and what it all might mean. Here are a few key takeaways and participant reactions that emerged:

The Power of Prompt Precision & Iteration: Many were struck by how much the phrasing of a prompt influenced the output. A simple instruction like “invent a word” produced bland results, but when participants added vivid context (e.g. “a feeling after a near-death experience” or “an object you found on an alien planet”), the AI responses became far more creative. This drove home a core lesson: crafting good prompts is an iterative, creative process. A few students commented that it felt like being an editor for the Gen AI tool – the more detail and direction they gave, the better the “draft” the model returned.
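The editing loop participants described can be made explicit by treating a prompt as a base task plus layers of context and constraints. A small Python sketch follows; `build_prompt` is a hypothetical helper, and the example constraints are illustrative rather than ones used at the event.

```python
from typing import Optional, Sequence


def build_prompt(task: str, context: Optional[str] = None, constraints: Sequence[str] = ()) -> str:
    """Compose an input prompt: the base task, optional context, then any extra constraints."""
    first = task if context is None else f"{task} that describes {context}"
    return ". ".join([first, *constraints]) + "."


if __name__ == "__main__":
    drafts = [
        build_prompt("Invent a new word"),
        build_prompt("Invent a new word", "the feeling after a near-death experience"),
        build_prompt(
            "Invent a new word",
            "the feeling after a near-death experience",
            ["Give its pronunciation", "Use it in one sentence"],
        ),
    ]
    for number, draft in enumerate(drafts, start=1):
        print(f"Draft {number}: {draft}")
```

Each draft keeps everything from the previous one and adds one layer, which mirrors the "editor" role students described.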

Delightful Creativity (and Perplexing Oddities): Participants were genuinely delighted by some of the AI’s inventions. In the Pirate vs. Child round, people laughed and marveled at outputs like “glintrockle” (the pirate’s gemstone) and “heartwrack” (a word one prompt coined for heartbreak on the high seas). These moments showed that AI can enhance creativity – coming up with colorful words or ideas we might not think of – especially when guided by a fun scenario. At the same time, we saw the model stumble or produce nonsensical blends on occasion (one mashup word, “slimejestick,” had us scratching our heads). This led to a quick aside on randomness (temperature) settings: we had set the model’s creativity parameter to a moderate level (to avoid totally wacky gibberish while still allowing novelty). One participant wondered if tweaking that might yield even crazier words or more child-like “mistakes.” Indeed, one example gave us “Crystalito,” a “sparkly rock mommy says looks like candy.” Huh? We learned that embracing the weirdness is part of the fun, but also that human interpretation was needed – we, as a group, chose which invented words actually made sense or had poetic charm. This made it clear that we were the curators of the AI’s output, not passive consumers.
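Temperature is easiest to see on a toy example. A language model scores candidate next tokens, divides those scores (logits) by the temperature, and samples from the resulting softmax distribution, so a lower temperature concentrates probability on the top choice and a higher one spreads it toward unlikely options. The Python sketch below is a simplified illustration with made-up scores and whole words standing in for tokens; it is not the hosted model's actual sampling code, which typically also applies settings such as top-p.

```python
import math
import random
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample(options: Sequence[str], logits: Sequence[float], temperature: float, rng: random.Random) -> str:
    """Draw one option according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(options, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical candidates and scores; real models score sub-word tokens, not whole words.
    words = ["glintrockle", "heartwrack", "slimejestick"]
    scores = [2.0, 1.5, 0.2]
    for t in (0.2, 0.7, 1.5):
        probs = softmax_with_temperature(scores, t)
        print(t, [round(p, 2) for p in probs])
    rng = random.Random(7)  # fixed seed so reruns give the same draws
    print([sample(words, scores, 0.7, rng) for _ in range(5)])
```

Raising the temperature increases the chance of the lowest-scored option, which is one plausible route to more "slimejestick"-style surprises.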

Language Biases, Misinformation, and Model Quirks: A very interesting insight was how the AI’s training biases and defaults showed through, even when we gave it personas. For example, our “4-year-old bilingual child” persona didn’t always sound like a toddler – at times the tone was oddly advanced. At one point, the child persona output the phrase “Nebula Nimbus” as a new word, which led someone to ask, “Is it thinking in Spanish? Not sure…” We noticed the model didn’t actually code-switch or use any Spanish words despite the bilingual setup (likely because our input prompt didn’t explicitly invite it, and the model defaulted to English). This opened a mini-discussion on how the model was handling the instruction “you grew up bilingual” – perhaps it subtly influenced word choice, or maybe it had no effect without an explicit cue. Meta’s LLaMA model had been trained on conversational data, so it tended to respond in an overly helpful, upbeat tone. Indeed, even as a pirate or a tree, the Gen AI tool often started with a friendly explanatory sentence, much like ChatGPT’s style. “You’ll see the model is very eager to help, in a way that bleeds through,” I noted, “because it was trained on support chat transcripts, it always says like ‘Sure, I can help with that!’” This was an aha moment: the Gen AI tool is not neutral; it carries the imprint of its training. We realized that prompt designers sometimes have to fight the model’s built-in style (or leverage it), depending on the goal. Everyone found it intriguing that even with a “system prompt” persona in place, the underlying chirpy assistant personality of the model could peek out.

Multilingual, Ethical and Cultural Reflections: One of the Gen Z Pirate’s invented words combined what appeared to be Arabic phonemes, and the model claimed that “rad” meant “deep” in Arabic. A quick dictionary search turned up a more nuanced etymology that contradicted this purported (and very confident!) claim.

Perhaps the most meaningful conversation came when we connected our playful exercise to real-world issues. During the Bart Simpson round, I said (paraphrasing): “This is hilarious, but it makes me think – with Bart’s voice being generated, what about the writers’ and actors’ strike going on? The WGA is striking about AI usage. If an AI can produce dialogue in Bart Simpson’s voice, are writers even needed anymore?” This prompted a thoughtful group discussion about labor and creativity. We acknowledged the ongoing Writers Guild of America strike and SAG-AFTRA’s concerns; at that time, both unions were actively negotiating limits on AI in scripts and voice acting. Using Bart Simpson (a copyrighted character with a famous voice actor) as a persona really brought that home. Participants connected this labor action to the evening’s prompt battles, recognizing that prompt engineering is itself a form of creative labor, and that unregulated AI could devalue or displace human writers. One student noted: “Seeing us all jockey for best prompt made me realize: prompting is a skill – and if studios automate it with AI, real writers’ jobs could be at risk.”

Our consensus, in line with what our co-facilitator Zach articulated, was that Gen AI is a tool, not a replacement for human writers. The outputs we got were fun, but clearly not polished scripts – and they originated from our prompts and ideas. As Zach put it during the discussion, “We still need someone to come up with the idea and refine these outputs. It’s not going to replace an entire profession. This exercise hopefully shows that.”

In fact, one participant noted that many of the best AI-generated words would need a human to actually define them more coherently or decide where to use them. This conversation tied the bow on our event: even in a lighthearted “joust,” issues of authorship and ethics are never far away. By experiencing what the AI could and couldn’t do, the group came to appreciate the importance of human creativity and judgment in the loop.

Technical Curiosity & Privacy Awareness: Many asked how to explore Replicate on their own (without exposing private data), evidencing newfound vigilance about what to feed into AI systems and where those inputs go.
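For anyone exploring on their own, two habits address those privacy questions: keep your API token in an environment variable rather than in a shared notebook, and strip obvious personal details before a prompt leaves your machine. Below is a rough Python sketch, assuming the Replicate client's convention of reading `REPLICATE_API_TOKEN` from the environment; `scrub` and `api_token` are hypothetical helpers, and the regular expressions are deliberately crude (they will miss some personal data and also catch things like dates), so treat them as a safety net, not a guarantee.

```python
import os
import re

# Crude patterns for illustration; they will miss some personal data and over-match some numbers.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders before sending a prompt."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return PHONE_PATTERN.sub("[PHONE]", text)


def api_token() -> str:
    """Read the API token from the environment so it never appears in shared code or slides."""
    token = os.environ.get("REPLICATE_API_TOKEN")
    if not token:
        raise RuntimeError("Set REPLICATE_API_TOKEN in your shell instead of pasting it into code.")
    return token


if __name__ == "__main__":
    print(scrub("Write a poem for jane.doe@example.edu; call me at 215-555-0123."))
```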

In summary, the Prompt Battle-Off was not just silly fun (students called it “the most fun I’ve had with AI.”); it was also an educational crash course in prompt engineering, model behavior, and the social implications of AI. People left the room with a better understanding of how to get the outputs they want (and an appreciation for the outputs they didn’t expect). As one attendee told me afterward, “I feel like I understand AI’s strengths and weaknesses much better now – and I’m less afraid of it.” Mission accomplished!

Prompt Battles Beyond (Related Work)

Toward the end of our session, someone asked me whether these kinds of prompt battles happen elsewhere. The answer is yes: prompt competitions have been popping up in universities, industry, and art spaces. A Berlin design agency, MetaDesign, hosted an after-work AI Prompt Battle where colleagues competed to create the best AI-generated images on a given theme (in their case, “what fashion will look like in 100 years”). In March 2024, ACMI (Australia’s national museum of screen culture in Melbourne) held a live Prompt Battle as part of a photography festival, where artist Sebastian Schmieg invited audience members on stage for head-to-head Midjourney image-generation battles. This format was originally developed at HTW Dresden in Germany. The Wisconsin Alumni Research Foundation and the UW-Madison Data Science Institute have also hosted “MadPrompts,” a generative AI prompt battle.

Prompt Battle-Off Reproducibility Kit (Run Your Own Prompt Joust)

For those interested in replicating this workshop format, I’m happy to share a “Prompt Battle” reproducibility kit. Here is the run-of-show template, facilitator tips, sample system prompts, and a list of materials/tech setup you’ll need (projector, laptops, access to an AI model, etc.).

Instructor Prompt Battle-Off Workshop Kit

1. Workshop Overview

Title: Prompt Battle-Off

Duration: 1 hour 40 minutes (about 1 hour 30 minutes if you skip the optional round)

Audience: Faculty, librarians, students, and staff with minimal AI background interested in Writing & Learning using Gen AI Tools.

Learning Goals:

- Understand prompt engineering and large language model (LLM) behaviors.

- Identify bias, hallucinations, and authorship concerns in AI outputs.

- Participate in a hands-on, collaborative, low-stakes competition to build AI literacy.

2. Run-of-Show Template

| Time | Activity | Steps |
| --- | --- | --- |
| 0:00–0:15 | Introduction & Welcome | Review Ethical Guidelines & Code of Conduct; Outline Rules & Scoring |
| 0:15–0:20 | Team Formation | Divide into Team A and Team B; Distribute team name cards |
| 0:20–0:30 | System Persona Design | Teams create a system prompt; Use persona template cards |
| 0:30–0:50 | Round 1: Invent a New Word | Creative Writing Prompt: "Invent a new word that describes ___." Teams draft input prompts |
| 0:50–0:53 | Round 1 Submission | Volunteers enter prompts into LLM textbox |
| 0:53–0:58 | Output Judging & Analysis | Project side-by-side outputs; Discuss tone, creativity, bias, clarity |
| 0:58–1:05 | System Persona Tweak for Round 2 | Teams refine or swap system prompts |
| 1:05–1:10 | Round 2: Worldbuilding Prompt | Creative Writing Prompt: "Develop a vivid setting for ___." Teams craft input prompts |
| 1:10–1:13 | Round 2 Submission | Teams enter prompts into LLM |
| 1:13–1:18 | Output Judging & Analysis | Compare outputs: detail, immersion, persona fidelity |
| 1:18–1:25 | Optional Additional Rounds | Run Round 3 or move to winners |
| 1:25–1:28 | Announcement of Winners | Tally votes/applause for best prompt+persona combos |
| 1:28–1:35 | Group Discussion & Debrief | Use Guiding Questions |
| 1:35–1:40 | Wrap-Up & Next Steps | Share Resources, Collect feedback |
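If you shorten or stretch a segment, every clock time after it has to shift too. Here is a small Python sketch that regenerates the time column from segment lengths, using the durations in the run-of-show table (the names `SEGMENTS`, `clock`, and `schedule` are our own). With every row included the run ends at 1:40; dropping the optional round brings it to 1:33.

```python
from typing import List, Tuple

# (activity, minutes) in run-of-show order, taken from the run-of-show table.
SEGMENTS: List[Tuple[str, int]] = [
    ("Introduction & Welcome", 15),
    ("Team Formation", 5),
    ("System Persona Design", 10),
    ("Round 1: Invent a New Word", 20),
    ("Round 1 Submission", 3),
    ("Output Judging & Analysis", 5),
    ("System Persona Tweak for Round 2", 7),
    ("Round 2: Worldbuilding Prompt", 5),
    ("Round 2 Submission", 3),
    ("Output Judging & Analysis", 5),
    ("Optional Additional Rounds", 7),
    ("Announcement of Winners", 3),
    ("Group Discussion & Debrief", 7),
    ("Wrap-Up & Next Steps", 5),
]


def clock(minutes: int) -> str:
    """Format elapsed minutes as H:MM, matching the table's time column."""
    return f"{minutes // 60}:{minutes % 60:02d}"


def schedule(segments: List[Tuple[str, int]], skip_optional: bool = False) -> Tuple[List[Tuple[str, str]], int]:
    """Return (time range, activity) rows plus the total running time in minutes."""
    rows: List[Tuple[str, str]] = []
    elapsed = 0
    for activity, length in segments:
        if skip_optional and activity.startswith("Optional"):
            continue
        rows.append((f"{clock(elapsed)}-{clock(elapsed + length)}", activity))
        elapsed += length
    return rows, elapsed


if __name__ == "__main__":
    rows, total = schedule(SEGMENTS, skip_optional=True)
    for time_range, activity in rows:
        print(f"{time_range:<11} {activity}")
    print("Total:", clock(total))
```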

3. Technical Requirements & Setup

- Hardware: 2+ laptops with internet, projector or monitor.

- Software: Access to an LLM endpoint (Replicate.ai, OpenAI).

- Configuration:

  - Model: an OpenAI “mini” model (e.g., GPT-4o mini), a LLaMA-3 variant, or equivalent

  - Settings: Temperature 0.7, Max tokens 200

- Accessibility: Ensure screen-reader compatibility, large-print materials, and a captioned display.
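To script this configuration instead of typing into a web playground, the sketch below packages the settings above (temperature 0.7, 200 tokens) into a request for Replicate's Python client. Several parts are assumptions to check before use: the model identifier `meta/llama-2-70b-chat` is only an example, the input field names (`system_prompt`, `prompt`, `max_new_tokens`) vary between models on Replicate, and `build_input` and `run_persona` are hypothetical helpers. Only `build_input` runs offline; `run_persona` needs `pip install replicate` and a `REPLICATE_API_TOKEN`.

```python
import os
from typing import Any, Dict

# Example model identifier (an assumption); substitute the model you actually pick on Replicate.
MODEL = "meta/llama-2-70b-chat"


def build_input(system_prompt: str, user_prompt: str,
                temperature: float = 0.7, max_tokens: int = 200) -> Dict[str, Any]:
    """Bundle one persona, one input prompt, and the workshop settings into a request body.

    Field names are assumed from Llama-style chat models on Replicate; check the
    model's API page, since some models call the length limit "max_tokens".
    """
    return {
        "system_prompt": system_prompt,
        "prompt": user_prompt,
        "temperature": temperature,
        "max_new_tokens": max_tokens,
    }


def run_persona(system_prompt: str, user_prompt: str, **settings: Any) -> str:
    """Call the hosted model once and join its text chunks into one string."""
    import replicate  # imported here so build_input works even without the client installed

    if not os.environ.get("REPLICATE_API_TOKEN"):
        raise RuntimeError("Set REPLICATE_API_TOKEN before calling the model.")
    output = replicate.run(MODEL, input=build_input(system_prompt, user_prompt, **settings))
    # Assumes the model returns its text as a sequence of string chunks.
    return "".join(output)
```

Calling `run_persona` once per persona with the same `user_prompt` reproduces the two-laptop comparison in a script.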

4. Materials & Templates

4.1. Prompt Templates

4.1.1. System Persona Templates:

- "[Context] You are [persona], a [role] who [quirk/expertise]. Describe ___ in your voice, with a [tone] tone."

4.1.2. Input Prompt Starters:

- Invent a new word that describes [feeling/experience].

- Describe [setting] in vivid detail.

- Write dialogue between [characters] about [topic].

- Mash-up genre/voice, e.g., noir detective on Mars.
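If you prefer to print or project the templates with their blanks filled in, Python's `str.format` slots map neatly onto the bracketed placeholders. In the sketch below, the slot names, the reworded persona template, and the `fill` helper are our own illustration; `fill` refuses to produce a prompt with a blank left over.

```python
from string import Formatter
from typing import Dict

# Templates adapted from the lists above, with {slots} in place of [brackets].
PERSONA_TEMPLATE = "{context} You are {persona}, a {role} who {quirk}. Describe {subject} in a {tone} voice."
STARTERS: Dict[str, str] = {
    "word": "Invent a new word that describes {feeling}.",
    "setting": "Describe {setting} in vivid detail.",
    "dialogue": "Write dialogue between {characters} about {topic}.",
}


def fill(template: str, **slots: str) -> str:
    """Fill every {slot} in a template, raising KeyError if any slot is left blank."""
    needed = {field for _, field, _, _ in Formatter().parse(template) if field}
    missing = needed - set(slots)
    if missing:
        raise KeyError(f"unfilled slots: {sorted(missing)}")
    return template.format(**slots)


if __name__ == "__main__":
    system_prompt = fill(
        PERSONA_TEMPLATE,
        context="It is the year 3000.",
        persona="a wise old tree",
        role="forest elder",
        quirk="is fed up with humans and loves arboreal wordplay",
        subject="a dream about a past life",
        tone="sage-like",
    )
    print(system_prompt)
    print(fill(STARTERS["word"], feeling="remembering a place you have never been"))
```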

4.2. Sample Creative Writing Prompts:

- Unreliable Narrator Perspectives

- Time / Word count-limited Challenge

- Stream of Consciousness

- Epistolary

- Alternate Universe

- Magic / Science Concept Creation

5. Debrief & Discussion

Guiding Questions:

- Prompt Precision & Impact: How did phrasing shape outputs? Which constraints yielded richer results?

- Bias & Ethics: Did you spot stereotypes or omissions? How might these outputs reinforce bias?

- Authorship & Labor: Who “owns” the output? What does this imply for creative work and AI in writing?

- Model Quirks & Limits: Where did the AI hallucinate? How would you refine prompts/settings?

- Future Applications: How could you adapt this format for your teaching or discipline?

6. Evaluation & Feedback

- Participant Survey: Likert-scale questions on engagement, learning outcomes, and confidence.

- Facilitator Reflection: Checklist for pacing, technical issues, and discussion depth.

- Success Metrics: Number of prompt iterations, quality of debrief insights, survey feedback.
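For the participant survey, a few lines of Python are enough to summarize 1–5 Likert responses per question. This is a sketch with hypothetical question names and made-up responses; `summarize` is an illustrative helper that reports the median (usually preferred for ordinal scales) alongside the mean and the share of 4s and 5s.

```python
from statistics import mean, median
from typing import Dict, List


def summarize(responses: Dict[str, List[int]]) -> Dict[str, Dict[str, float]]:
    """Summarize 1-5 Likert answers per question: count, median, mean, and share agreeing (4 or 5)."""
    summary: Dict[str, Dict[str, float]] = {}
    for question, scores in responses.items():
        if not scores or any(score not in range(1, 6) for score in scores):
            raise ValueError(f"{question!r} needs one or more scores between 1 and 5")
        summary[question] = {
            "n": len(scores),
            "median": median(scores),
            "mean": round(mean(scores), 2),
            "agree_share": round(sum(score >= 4 for score in scores) / len(scores), 2),
        }
    return summary


if __name__ == "__main__":
    # Hypothetical responses, not data from the Kelly Writers House event.
    survey = {
        "I can explain what a system prompt does": [4, 5, 3, 4, 5],
        "I feel confident iterating on a prompt": [3, 4, 4, 2, 5],
    }
    for question, stats in summarize(survey).items():
        print(question, stats)
```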

Do let me know if you use this format, if it worked out for you, how you made it your own, etc.

Thank you!

On that note, I’ll end with a hearty thank you to everyone who participated in the Prompt Battle-Off. It was exhilarating, enlightening, and above all a team effort. This blog post is late in coming, but it has been a valuable space for reflection. Thank you to the Kelly Writers House team for their generous hosting and spirit.

(P.S. If you’re curious to see the Prompt Battle-Off in action, check out the event recording on YouTube: Prompt Battle-Off with GPT Joust – Sept 7, 2023 with Timestamps. It’s edited for length, but you can catch many of the highlights and our commentary.)

Note of Acknowledgement: This blog post has been proofread, spell-checked, and made accessible using Gen AI tools.